Challenges

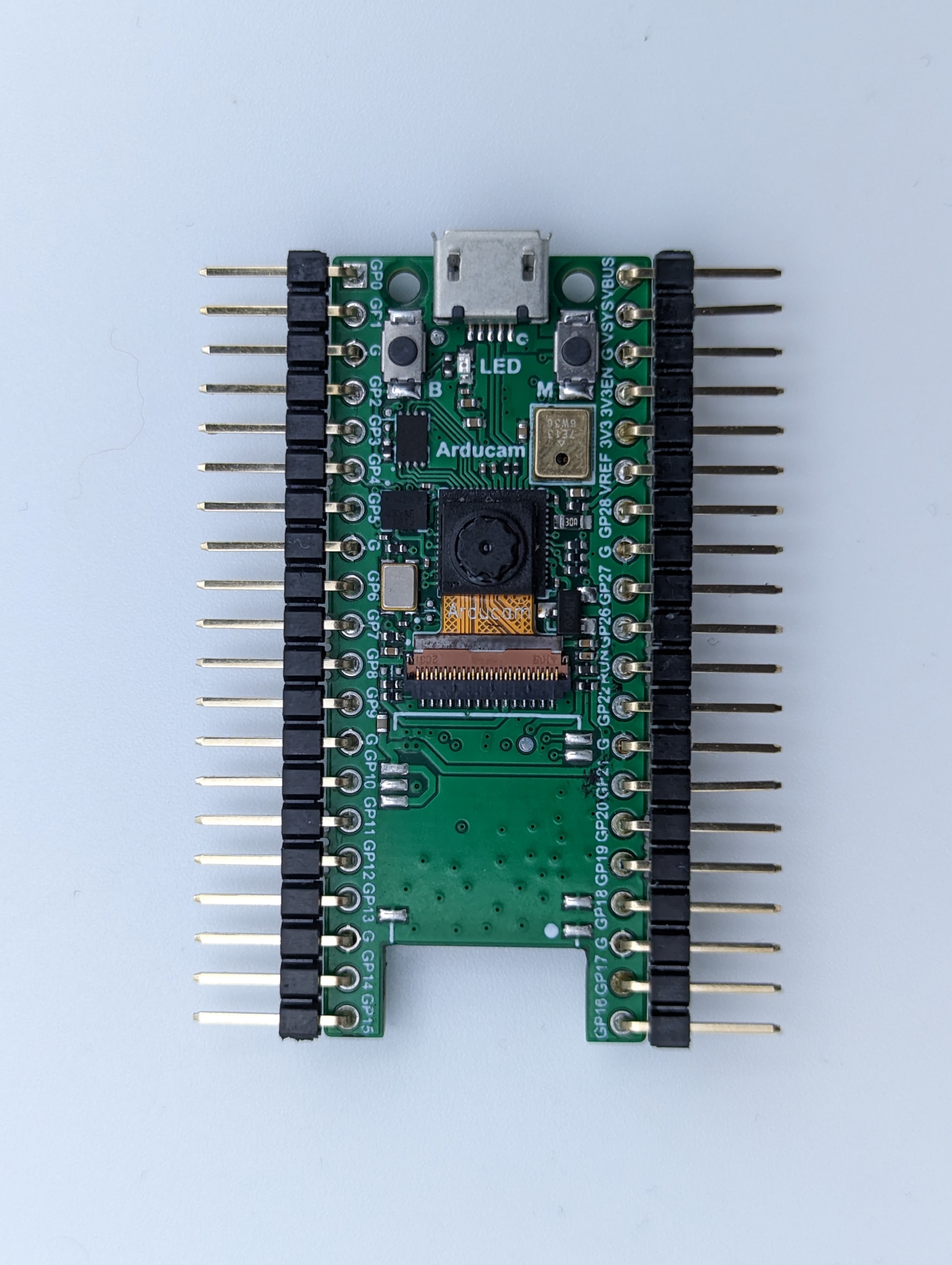

The RC car being used has an extremely light chassis to enable its wall-climbing feature. The drawback is that the car cannot support any payload or sensors.

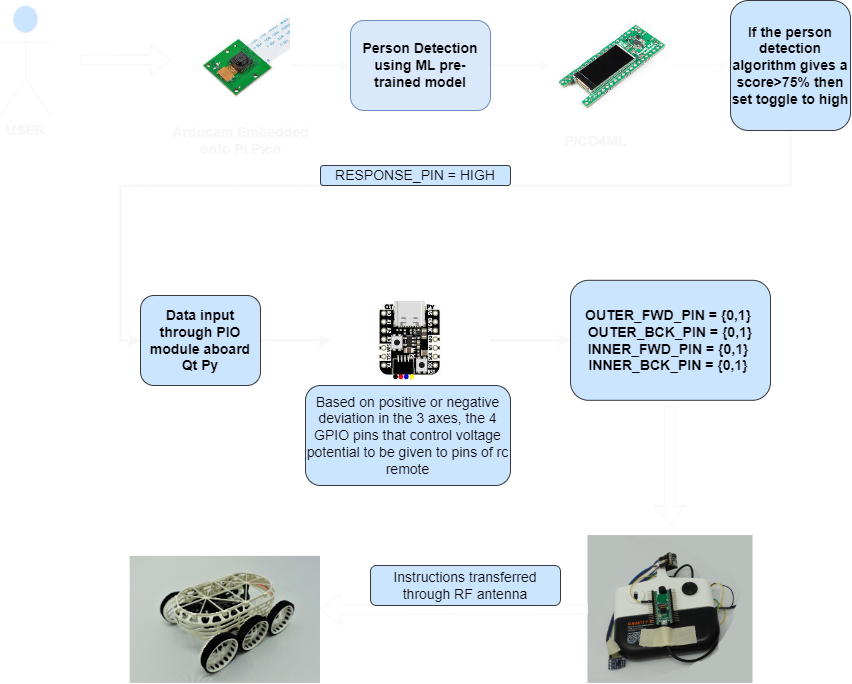

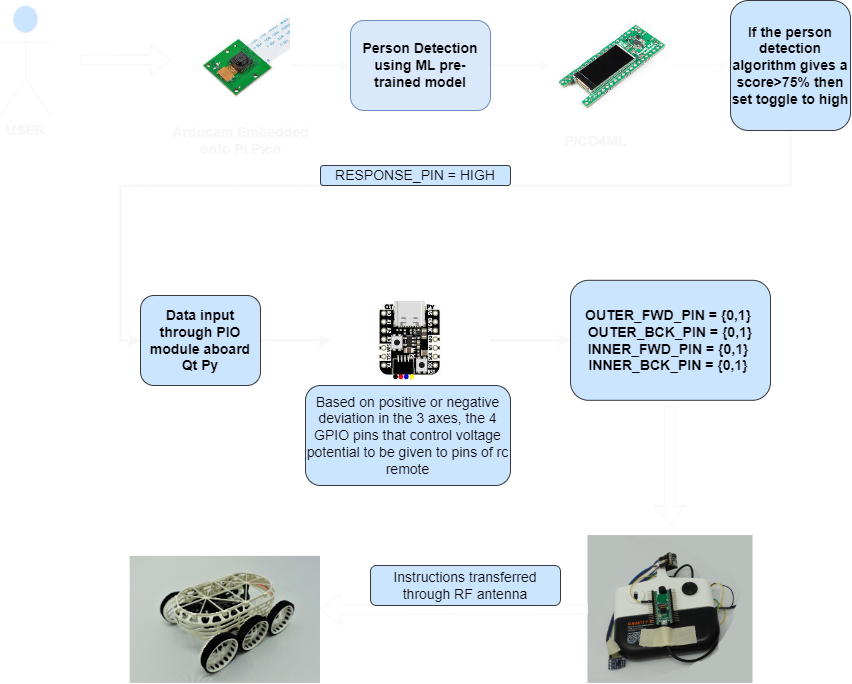

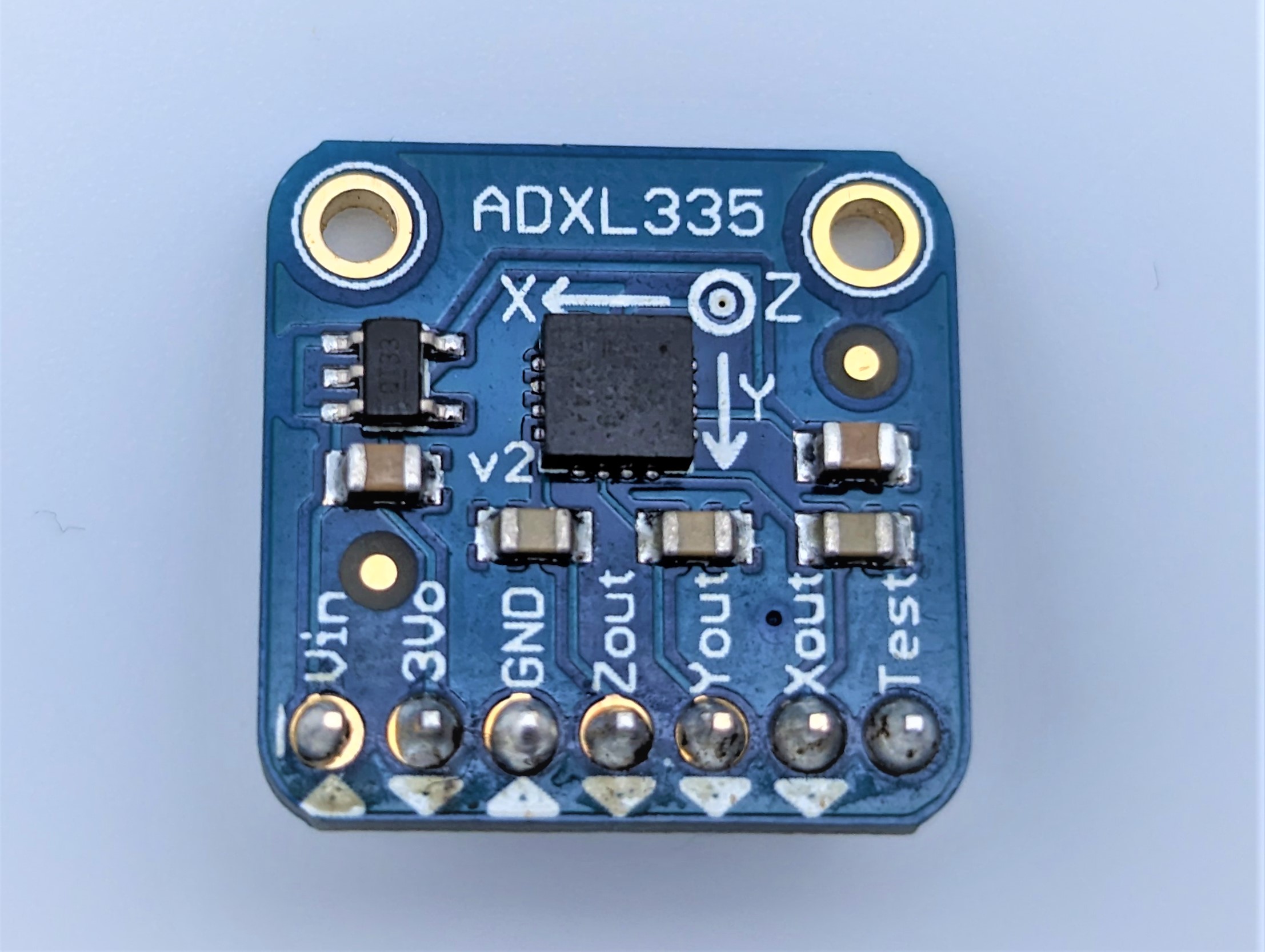

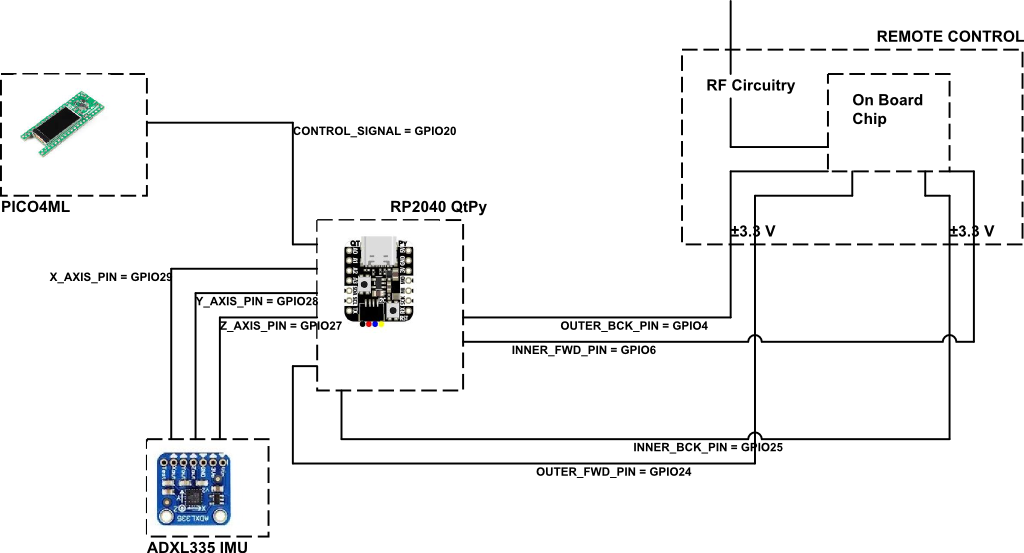

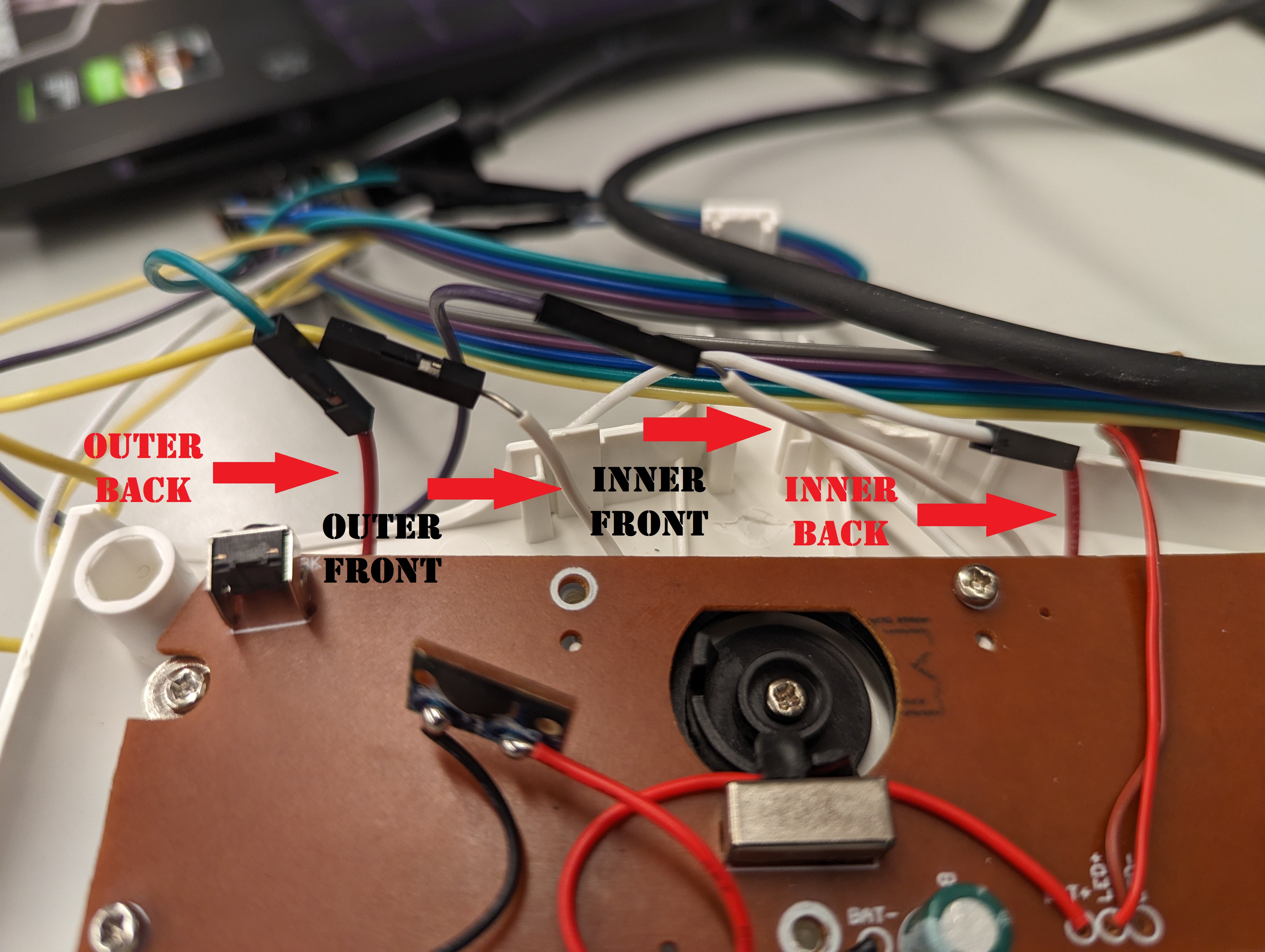

Since no sensors can be mounted on the car, it was difficult to track the position of the car itself. Thus, an alternative approach was used in which the IMU is mounted on the remote control. To judge the direction of the gesture being performed, the current position is stored in a temporary variable, and the intended motion is inferred from the difference between the new and old coordinates along a particular axis.
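The comparison of old and new coordinates can be sketched roughly as follows. This is a minimal illustration, not the project's actual firmware: the function name `classify_gesture`, the threshold value, and the mapping of axes to commands are all assumptions made for the example.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical dead-band: changes smaller than this are treated as noise.
THRESHOLD = 0.5

# Hypothetical mapping of each axis to a (negative, positive) command pair.
AXIS_COMMANDS = (("left", "right"), ("backward", "forward"), ("down", "up"))


def classify_gesture(previous: Vec3, current: Vec3,
                     threshold: float = THRESHOLD) -> Optional[str]:
    """Infer the intended motion from the change between two readings."""
    # Difference between the new and old coordinates on each axis.
    deltas = [new - old for old, new in zip(previous, current)]
    # Pick the axis with the largest absolute change.
    axis = max(range(3), key=lambda i: abs(deltas[i]))
    if abs(deltas[axis]) < threshold:
        return None  # no deliberate gesture detected
    negative, positive = AXIS_COMMANDS[axis]
    return positive if deltas[axis] > 0 else negative


# The previous reading plays the role of the temporary variable.
previous_reading = (0.0, 0.0, 0.0)
new_reading = (0.1, -0.9, 0.2)
command = classify_gesture(previous_reading, new_reading)
previous_reading = new_reading  # store for the next comparison
```

Using only the dominant axis keeps a slightly diagonal hand movement from triggering two commands at once; the threshold would need tuning against real IMU readings.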

Another challenge was to develop a dual mode of operation using the same microcontroller. The functionality was divided into two parts: control using a laptop keyboard, and control through a standalone gesture-based RC remote control.
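One way to share a single control loop between the two modes is sketched below. How the project actually switches modes is not described here, so the priority rule (a recognised key press overrides the gesture), the key bindings, and the name `next_command` are all assumptions for illustration.

```python
from typing import Optional

# Hypothetical key bindings for the laptop keyboard mode.
KEY_TO_COMMAND = {"w": "forward", "s": "backward", "a": "left", "d": "right"}


def next_command(key: Optional[str], gesture: Optional[str]) -> Optional[str]:
    """Return the command to send to the car for this loop iteration.

    A recognised key press takes priority; otherwise the gesture
    (if any) from the standalone remote is used.
    """
    key_command = KEY_TO_COMMAND.get(key.lower()) if key else None
    return key_command if key_command is not None else gesture
```

With this arrangement, `next_command("w", "left")` yields the keyboard command, while `next_command(None, "left")` falls back to the gesture.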

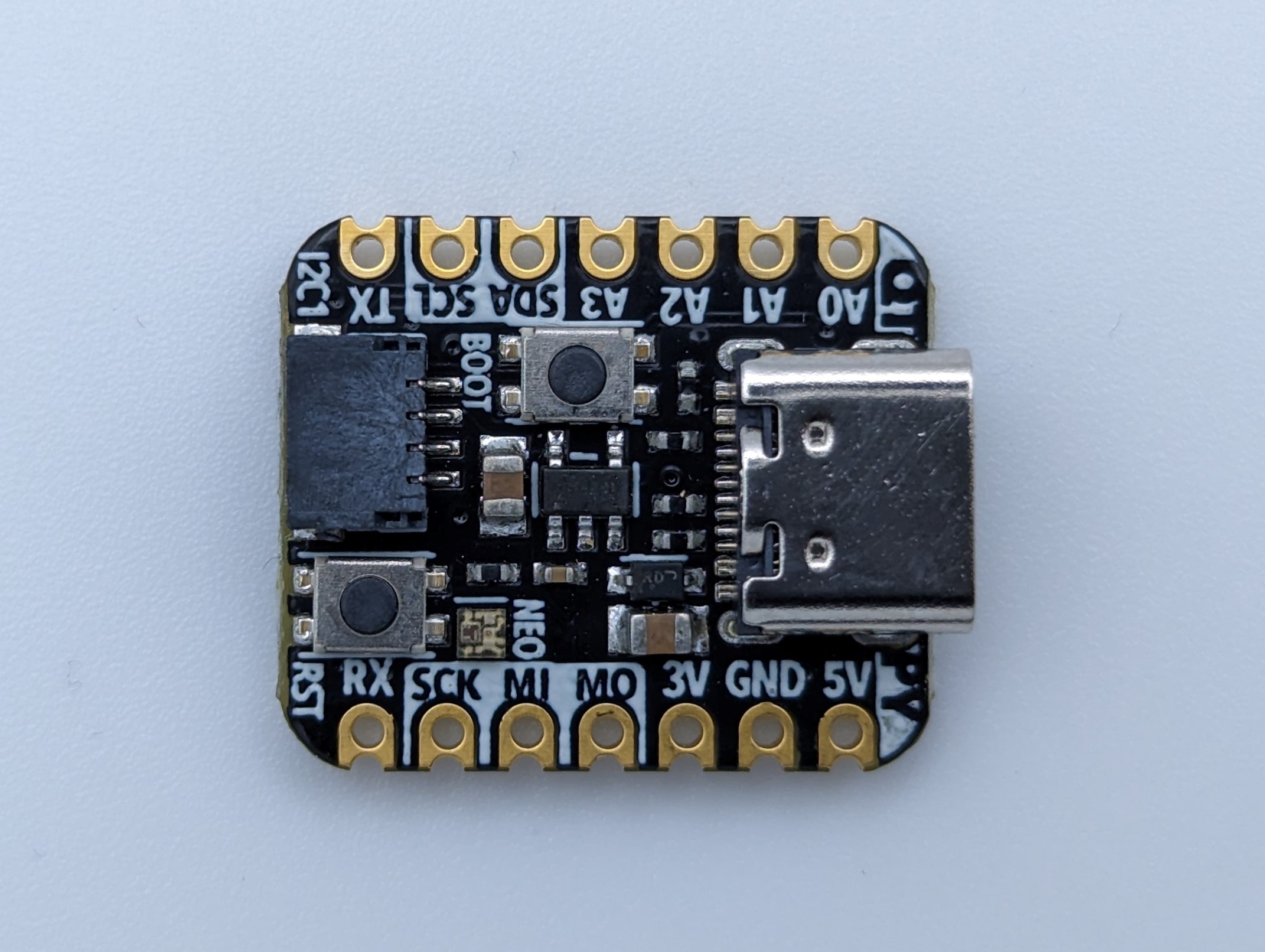

Human-detection-based power-on capability was one of the features envisioned for the project. The objective is to use the Pico4ML board's built-in face detection libraries to generate a toggle signal that is received by the RP2040 QT Py and starts or stops the functionality.
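On the receiving side, the toggle could be handled along these lines. This sketch assumes the signal arrives as a simple boolean level that goes high while a face is detected, and that each rising edge flips the running state; the class name `PowerToggle` is hypothetical, and the actual signalling between the boards may differ.

```python
class PowerToggle:
    """Flip an on/off state each time the input signal goes from low to high."""

    def __init__(self) -> None:
        self.enabled = False        # car control starts disabled
        self._last_signal = False   # previous level of the toggle line

    def update(self, signal: bool) -> bool:
        # Act only on a rising edge so a held-high signal toggles once.
        if signal and not self._last_signal:
            self.enabled = not self.enabled
        self._last_signal = signal
        return self.enabled


toggle = PowerToggle()
# Simulated readings of the toggle line over successive loop iterations.
states = [toggle.update(level) for level in (False, True, True, False, True)]
```

Reacting to the edge rather than the level means a face that stays in view does not repeatedly start and stop the car.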